Quantum AI: The Next Frontier in Computing

Exploring how quantum computing and artificial intelligence together could redefine the future of technology, through the lens of LupoToro Group’s R&D teams.

As we enter the midpoint of the 2010s, technology is evolving faster than ever, shaping the way we work, think, and innovate. Two revolutionary trends stand out: advances in Artificial Intelligence (AI) — particularly new deep learning methods — and breakthroughs in Quantum Computing (QC). At LupoToro Group, our research and development teams are keenly watching the convergence of these fields. We find ourselves asking a tantalizing question: What if we could run advanced AI algorithms on a quantum computer?

This might sound like an excitable futurist’s dream or a mash-up of buzzwords. Yet, researchers and industry experts are seriously considering this idea. The motivation is simple: combining AI and quantum computing could unlock computing capabilities well beyond what either can do alone. In this article, we’ll break down the fundamentals of both technologies, explore how they might enhance each other, highlight early experiments (like quantum versions of cutting-edge AI models), and discuss the potential applications – from civilian benefits to military uses – and challenges on the road ahead. This near-future outlook, written as if in 2015, anticipates what the coming years could hold for Quantum AI.

AI’s New Superpower: Attention Mechanisms in Deep Learning

Artificial Intelligence has come a long way since its origins in the 1950s. Early AI systems were rule-based and relatively brittle, but the field has since progressed through machine learning in the 1990s to deep learning in the 2010s. Thanks to improved algorithms and more powerful processors (especially GPUs), AI now achieves remarkable feats in speech recognition, image classification, and natural language understanding. Despite these successes, today’s AI still faces some key challenges:

Data- and Power-Intensive Training: Modern AI models (particularly deep neural networks) require enormous datasets and computational power. Training large networks can take weeks and consume vast energy resources.

Optimization Bottlenecks: Much of AI development involves solving tough optimization problems, like efficiently training neural networks or tuning many hyperparameters. These tasks can be incredibly complex and time-consuming on classical computers.

Lack of Transparency: Many advanced AI models act as “black boxes.” They can make impressive predictions or decisions, but it’s difficult to interpret how they arrived at those outcomes, which is a concern for critical applications.

Computational Limits: Even our best classical supercomputers struggle with certain problem types (for example, combinatorial problems with astronomically many possibilities). There are tasks in optimization, drug discovery, and more that remain out of reach for classical computing alone.

One of the most exciting developments in AI research addressing some of these challenges is the rise of attention mechanisms. Attention mechanisms allow AI models to focus on the most relevant parts of their input data, much as humans pay selective attention. First applied to neural machine translation in 2014, attention points toward a new kind of deep learning architecture built almost entirely around it, the design that would come to be known as the “transformer.” Still in its infancy, this attention-centric design is poised to become the state of the art in natural language processing. Its core idea is a network that learns how much weight to give each element of its input.

For example, consider the sentence: “She is eating a green apple.” A traditional model might process each word with equal focus or in a fixed window, but an attention-based model identifies the key pieces: eating, green, and apple. It learns that the action eating is closely connected to the object apple, while the color green is an attribute of the apple and less relevant to the action. By dynamically weighting these relationships, the model understands context in a way earlier algorithms could not. This attention mechanism mimics how humans interpret language or visuals – focusing on the most pertinent parts of an input and the connections between them.
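The weighting described above can be sketched with scaled dot-product attention, the formulation used in transformer models. This is a minimal illustration under loud assumptions: the four-dimensional word vectors are hand-picked (hypothetically encoding “action”, “food”, “color”, and “function word” features) rather than learned, and the query, key, and value projections a real model would learn are omitted, so each word is compared to the others directly.

```python
import numpy as np

TOKENS = ["She", "is", "eating", "a", "green", "apple"]

# Hypothetical hand-picked embeddings: [action, food, color, function-word].
# A trained model would learn these vectors; they are illustrative only.
EMBEDDINGS = np.array([
    [0.1, 0.0, 0.0, 0.5],  # She
    [0.0, 0.0, 0.0, 1.0],  # is
    [1.0, 0.8, 0.0, 0.0],  # eating
    [0.0, 0.0, 0.0, 1.0],  # a
    [0.0, 0.3, 1.0, 0.0],  # green
    [0.6, 1.0, 0.4, 0.0],  # apple
])


def softmax(x, axis=-1):
    """Numerically stable softmax: subtract the row maximum before exponentiating."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """Return (outputs, weights); weights[i, j] is how much token i attends to token j."""
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)  # pairwise relevance scores
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


# Self-attention with identity projections (a simplification): Q = K = V = embeddings.
outputs, weights = scaled_dot_product_attention(EMBEDDINGS, EMBEDDINGS, EMBEDDINGS)
row = weights[TOKENS.index("eating")]
for token, w in zip(TOKENS, row):
    print(f"{token:>6}: {w:.3f}")
```

With these toy vectors, “eating” places more weight on “apple” than on “green” or the function words, which is the qualitative behavior the example sentence describes.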

The transformer architecture built on attention could be transformative (no pun intended) for AI. We anticipate that in a few short years, such models will enable highly sophisticated language translators and conversational agents far more coherent than those of 2015. In fact, one can imagine that by the early 2020s, we might see AI chatbots or assistants producing human-like responses, powered by these attention-centric neural networks. This progress in AI is remarkable on classical computers – but now we turn to how an entirely different computing paradigm might supercharge these capabilities.

Quantum Computing: A New Dimension of Processing Power

While AI was booming on classical silicon, quantum computing was advancing on a radically different front. Classical computers (from smartphones to supercomputers) use binary bits that are either 0 or 1. Quantum computers, on the other hand, use quantum bits or “qubits.” Thanks to principles of quantum mechanics, a qubit can exist as 0, 1, or a weighted combination of both at once (a superposition). Qubits can also become entangled, meaning their states can be correlated in ways that defy classical intuition. In practical terms, the joint state of n qubits is described by 2ⁿ amplitudes, so a register of entangled qubits can represent a vast number of possibilities at once. The catch is that measuring the register yields just one outcome, so quantum algorithms must arrange for the useful answers to be the likely ones.

A common metaphor is a coin flip: a classical bit is like a coin that’s firmly landed on heads or tails, whereas a qubit is like a coin spinning in the air, in a sense both heads and tails until observed. By leveraging superposition, entanglement, and interference, quantum computers can work on many candidate solutions in superposition and amplify the right ones, rather than checking candidates one by one as classical machines do. This makes them potentially game-changing for certain kinds of problems.
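A small state-vector simulation makes this bookkeeping concrete. The sketch below assumes NumPy and the common convention that the first qubit is the leftmost factor of each Kronecker product. It builds a uniform superposition over three qubits (eight amplitudes, one per basis state) and an entangled two-qubit Bell state, then samples measurements to show that each shot returns a single outcome, with the two Bell-state qubits always agreeing.

```python
from functools import reduce

import numpy as np

KET0 = np.array([1.0, 0.0])
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)  # Hadamard gate
I2 = np.eye(2)
# CNOT with qubit 0 as control; basis order |00>, |01>, |10>, |11>.
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)


def kron_all(*factors):
    """Tensor product of several vectors or matrices, left to right."""
    return reduce(np.kron, factors)


def uniform_superposition(n):
    """Apply a Hadamard to each of n qubits starting from |0...0>."""
    return kron_all(*[H] * n) @ kron_all(*[KET0] * n)


def bell_state():
    """(|00> + |11>)/sqrt(2): Hadamard on qubit 0, then CNOT."""
    return CNOT @ kron_all(H, I2) @ kron_all(KET0, KET0)


def measure(state, shots, seed=0):
    """Sample basis-state indices with probabilities |amplitude|^2."""
    rng = np.random.default_rng(seed)
    probs = np.abs(state) ** 2
    return rng.choice(len(state), size=shots, p=probs)


psi = uniform_superposition(3)
print(len(psi), np.round(np.abs(psi) ** 2, 3))  # 8 amplitudes, each outcome has probability 1/8
bell = bell_state()
outcomes = measure(bell, shots=1000)
print(sorted(set(outcomes.tolist())))  # only 0 (|00>) and 3 (|11>) ever appear
```

Simulating n qubits this way needs memory for 2ⁿ amplitudes, which is exactly why classical simulation runs out of steam as qubit counts grow.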

To illustrate the advantage, imagine you’re searching for a name in a totally unsorted phone book. A classical computer would check each entry one by one, a process that scales linearly with the number of entries. A quantum computer running Grover’s search algorithm could, in theory, find the entry in quadratically fewer steps: if a classical search takes on the order of N steps, the quantum search takes roughly √N, because each step amplifies the amplitude of the correct entry while suppressing the others. Other quantum algorithms promise even larger speedups on specific problems; Shor’s algorithm, for example, factors large numbers super-polynomially faster than the best known classical methods. This is why quantum hardware is expected to tackle problems that are impractical for classical computers, from factoring huge numbers (breaking cryptographic codes) to simulating complex molecules for chemistry and materials science.
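The phone-book search can be simulated classically for a handful of qubits. This is a minimal sketch of Grover’s algorithm assuming a single marked entry; the oracle is modeled simply as a sign flip on that entry’s amplitude, and the diffusion step reflects every amplitude about the mean, which together amplify the marked entry.

```python
import numpy as np


def grover_search(n_qubits, marked):
    """Classically simulate Grover's search for one marked index among 2**n_qubits items.

    Returns the measurement probabilities and the number of Grover iterations used.
    """
    n_items = 2 ** n_qubits
    state = np.full(n_items, 1 / np.sqrt(n_items))  # uniform superposition over all items
    iterations = int(np.floor(np.pi / 4 * np.sqrt(n_items)))  # near-optimal count for one marked item
    for _ in range(iterations):
        state[marked] *= -1  # oracle: flip the sign of the marked item's amplitude
        state = 2 * state.mean() - state  # diffusion: reflect every amplitude about the mean
    return state ** 2, iterations  # amplitudes stay real here, so probability = amplitude**2


probs, k = grover_search(n_qubits=4, marked=11)
print(k, round(float(probs[11]), 3))  # 3 iterations; marked-item probability is about 0.96
```

For 16 entries, a classical search needs around 8 lookups on average, while this run uses 3 oracle calls; the gap widens as N grows, since the quantum count scales with √N.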

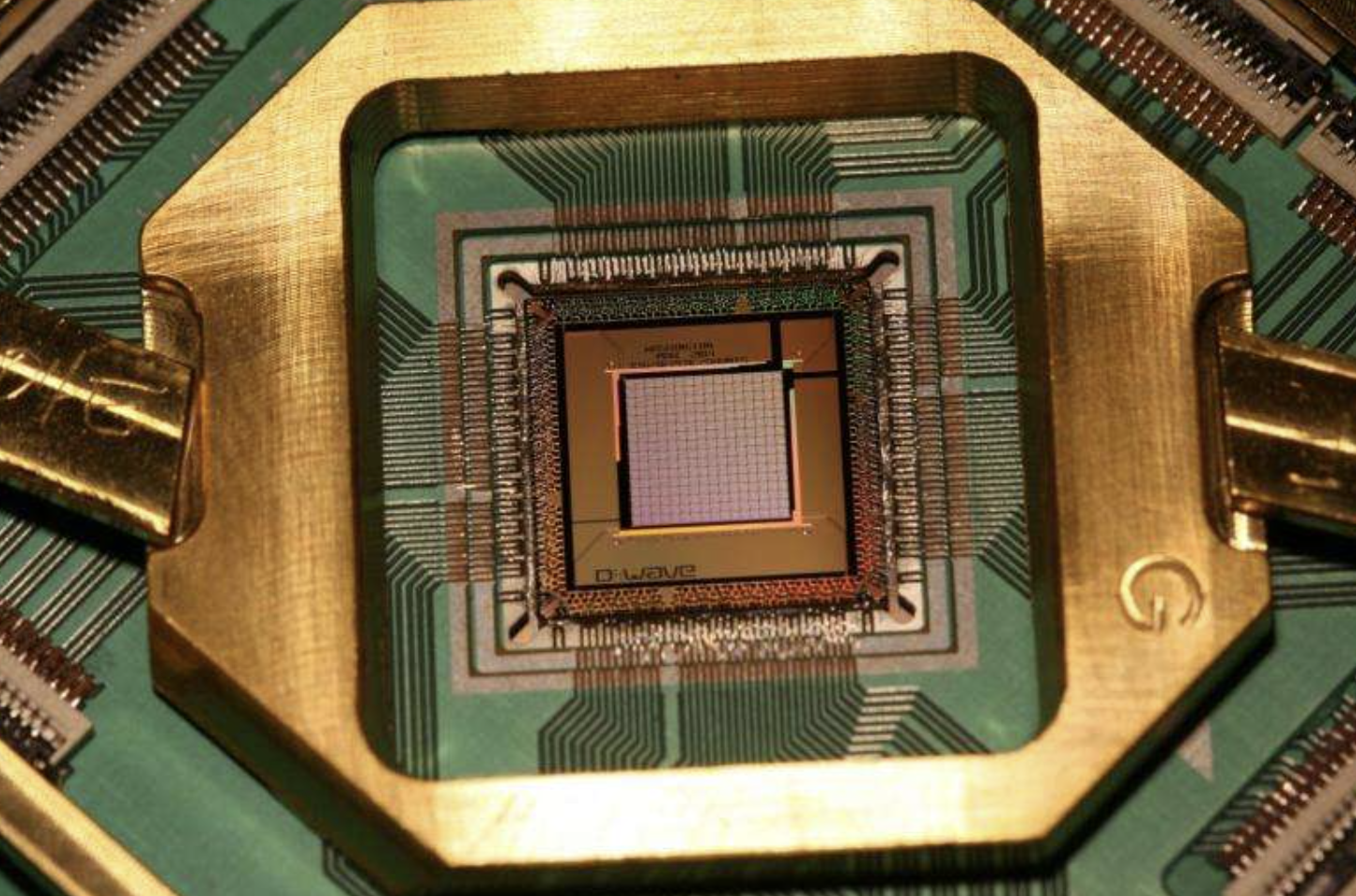

However, today’s quantum computers (in 2015) are still in their infancy. Gate-based prototypes in labs have only on the order of 5–10 working qubits. They are extremely sensitive: heat, electromagnetic noise, or tiny disturbances can knock qubits out of their quantum state (a phenomenon called decoherence), causing errors. Keeping qubits stable and error-free long enough to perform calculations is a massive engineering challenge. Despite these hurdles, progress has been rapid in the past few years. Academic labs and companies have demonstrated basic quantum operations, and qubit counts are steadily increasing. There’s talk of achieving a “quantum advantage” within the next decade, that is, a quantum computer solving a useful problem faster than a classical supercomputer.

Investments are pouring in worldwide. Major players like Google and IBM are heavily funding quantum computing research. Google, for instance, has a team exploring superconducting qubits and has partnered with NASA on quantum experiments. It wouldn’t be surprising if in a few years (perhaps by 2019 or so) we hear claims of a quantum computer outperforming all classical machines on a specific task – a milestone sometimes dubbed quantum supremacy. At the same time, startups and research firms are emerging, aiming to build specialized quantum hardware. (At LupoToro Group, our analysts keep a close eye on these developments, and even in 2015 we’re aware that entirely new companies and ecosystems will likely form around quantum technology by the 2020s.)

One particularly promising approach is to integrate quantum processors with classical supercomputers in a hybrid model. Rather than replacing classical computers, early quantum devices can act as accelerators for specific tasks. In fact, even now in 2015, some high-performance computing centers and forward-looking companies are experimenting with this hybrid concept: using classical systems for most operations but offloading certain computational bottlenecks to a quantum co-processor. This synergy hints at the broader theme of this article – combining strengths. Just as classical computing and AI grew hand-in-hand (AI benefiting from faster classical chips, classical systems benefiting from AI optimizations), we foresee quantum computing growing hand-in-hand with AI.

With that background, let’s address why merging AI with quantum computing is so enticing. What does it actually mean to combine these two technologies, and how could each enhance the other?

Why Combine AI and Quantum Computing?

Everyone seems to be talking about merging AI and quantum computing, but what does that really entail? In simple terms, AI (Artificial Intelligence) enables machines to learn from data, identify patterns, and make decisions, often inspired by human cognitive processes. Quantum computing, meanwhile, leverages quantum physics to process information in fundamentally new ways, handling certain computations much faster or more efficiently than classical computers. If we successfully bring these two together, we could witness a paradigm shift in computing capabilities. Here’s how each side of this duo could benefit the other:

Quantum Computing Boosting AI: Quantum algorithms might dramatically speed up certain steps in AI. For instance, training a deep learning model involves multiplying huge matrices and optimizing many parameters, tasks that quantum computers could potentially accelerate through faster linear algebra or combinatorial search. Quantum versions of machine learning algorithms (like quantum neural networks or quantum support vector machines) could handle massive datasets or complex pattern recognition faster than classical methods. In areas such as natural language processing (think language translation or future chatbots) and large-scale optimization (like scheduling or route planning), a quantum-enhanced AI could find solutions at “lightning speed” compared to what’s possible today. Quantum computing might also enable new kinds of AI models that we haven’t even thought of yet, by exploiting quantum phenomena to represent information in richer ways than classical bits can.
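To make “quantum support vector machines” a little more concrete: one proposal is a fidelity kernel, where each data point is loaded into a quantum state and the similarity between two points is the squared overlap of their states. A quantum device would estimate that overlap by repeated measurement, and a classical SVM would consume the resulting kernel matrix. The sketch below is an assumption-laden toy: it uses a simple per-feature angle encoding (RY rotations, no entanglement), which is easy to simulate classically and therefore offers no quantum advantage; proposals aimed at advantage use entangling feature maps.

```python
import numpy as np


def angle_encode(x):
    """Encode each feature x_i as RY(x_i)|0> = cos(x_i/2)|0> + sin(x_i/2)|1>, then tensor the qubits."""
    state = np.array([1.0])
    for xi in x:
        state = np.kron(state, np.array([np.cos(xi / 2), np.sin(xi / 2)]))
    return state


def fidelity_kernel(X, Y):
    """Kernel matrix K[i, j] = |<phi(x_i)|phi(y_j)>|^2 for the encoded states."""
    phi_x = np.array([angle_encode(x) for x in X])
    phi_y = np.array([angle_encode(y) for y in Y])
    return np.abs(phi_x @ phi_y.T) ** 2


# Three hypothetical 3-feature data points (one qubit per feature).
X = np.array([[0.1, 0.5, 1.2], [0.2, 0.4, 1.0], [2.5, 3.0, 0.3]])
K = fidelity_kernel(X, X)
print(np.round(K, 3))
```

Nearby points get kernel values close to 1 and distant points values close to 0. A matrix like `K` can be handed to any classical SVM that accepts precomputed kernels, for example scikit-learn’s `SVC(kernel="precomputed")`.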

AI Improving Quantum Computing: On the flip side, AI can make quantum computers more practical and powerful. Today’s quantum hardware suffers from noise, calibration issues, and errors. Machine learning can be employed to auto-calibrate quantum devices, adjusting control parameters to keep qubits stable without constant human tuning. This means a smoother user experience, lower operational costs, and fewer specialized experts needed to run the machine. AI algorithms can also assist in error correction and error mitigation. Quantum error correction is notoriously complex: it involves encoding a single logical qubit into many physical qubits and using clever schemes (decoders) to detect and fix errors on the fly. Designing efficient decoders is challenging, and machine learning looks like a promising aid: a decoder can be trained to recognize which error patterns produce which measured symptoms and to suggest the right fix, learning the quirks of a particular device’s noise rather than relying only on hand-designed rules. By letting AI automatically learn how to correct quantum errors, we could run longer and more complex quantum computations reliably. Additionally, AI can optimize how we compile and run quantum algorithms. There are many choices in how to map a high-level algorithm onto the quantum hardware’s specific constraints; AI can act as a smart scheduler or compiler (often called a transpiler in the quantum context), searching for an efficient way to execute a given task on a given quantum machine. This might involve choosing the best sequence of quantum logic gates or the best way to lay out qubits for minimal interference, tasks that grow very complex as machines scale. AI-driven transpilers could substantially improve the speed and fidelity of quantum programs.
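The idea of a decoder that learns from data can be shown in miniature. This is a deliberately simplified, classical sketch: it uses the 3-bit repetition code against bit flips only (real quantum codes must also handle phase errors and have vastly more syndromes), and a learned lookup table stands in for the machine-learning models the paragraph describes. For each syndrome (the pattern of parity checks), the decoder remembers which error pattern occurred most often in simulated noise.

```python
import random
from collections import Counter, defaultdict


def syndrome(bits):
    """Parity checks of the 3-bit repetition code: (b0 XOR b1, b1 XOR b2).

    Codewords 000 and 111 have syndrome (0, 0), so the syndrome depends only on the error.
    """
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def train_decoder(flip_prob, n_samples, seed=0):
    """Learn a syndrome -> correction table from simulated independent bit-flip errors.

    For each syndrome, keep the error pattern that occurred most often in the samples.
    """
    rng = random.Random(seed)
    counts = defaultdict(Counter)
    for _ in range(n_samples):
        error = tuple(int(rng.random() < flip_prob) for _ in range(3))
        counts[syndrome(error)][error] += 1
    return {s: c.most_common(1)[0][0] for s, c in counts.items()}


def decode(received, table):
    """Apply the learned correction for the received word's syndrome."""
    correction = table[syndrome(received)]
    return tuple(r ^ c for r, c in zip(received, correction))


table = train_decoder(flip_prob=0.1, n_samples=20_000)
print(decode((1, 1, 0), table))  # codeword 111 with its last bit flipped is restored to (1, 1, 1)
```

Under independent noise the learned table simply rediscovers majority voting; the appeal of learning is that the same procedure adapts automatically if a device’s noise is biased or correlated, and neural networks replace the table once the number of syndromes becomes too large to enumerate.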

In short, quantum computing and AI have a mutually beneficial relationship: quantum hardware can turbocharge AI software, and AI software can stabilize and enhance quantum hardware. This powerful synergy is precisely why the idea of Quantum AI is generating so much excitement. It’s not just about using a quantum computer to do the same old AI tasks a bit faster; it’s about potentially reinventing how computers “think” and how we design computers in the first place.

To make this more concrete, let’s dive into an example that exemplifies the convergence: applying quantum computing to one of AI’s most advanced models – the transformer (with its attention mechanism). This case study will show how researchers are already attempting to merge these technologies, even if only on a small scale, and what they have learned so far.

Case Study: Early Experiments with Quantum Neural Networks (The Quantum Transformer)

One of the first areas where scientists are testing the waters of Quantum AI is in quantum neural networks – essentially, trying to run or emulate neural network models on quantum hardware. In particular, the attention-based models we discussed (transformer-style networks) have become a prime candidate for quantum experimentation. The reason is twofold: transformers represent the cutting edge of AI model design, and they are computationally intensive, which makes them an interesting stress-test for any new computing paradigm such as quantum.

In a forward-looking research effort (one we envision for the near future), a team of researchers crafted what we might call a quantum transformer. The inspiration came from a medical AI application: classifying retinal images to diagnose diabetic eye disease. Traditionally, a deep learning model can be trained on thousands of retina scans and taught to grade the damage in each image on a scale (for example, from 0 for no sign of disease up to 4 for advanced diabetic retinopathy). In this hypothetical quantum experiment, the researchers took a simplified version of such a model and reimagined it for a quantum computer.

Designing a Quantum Attention Mechanism: The first challenge was to design a quantum circuit – essentially the “quantum software” – that could perform the attention mechanism of a transformer. This means the quantum circuit had to take an input (image data, encoded into qubits) and then focus on the most relevant features of that data, weighting them similar to how a classical attention model would. The team developed multiple quantum circuit architectures for this, each aiming to reproduce the attention behavior in a more resource-efficient way than a classical network. In fact, through mathematical analysis, they predicted that these quantum attention circuits could, in theory, capture the most important correlations in the data using fewer computational elements than a comparable classical network. This theoretical work gave confidence that a quantum transformer could pay attention to important parts of an input at least as well as a classical transformer – and perhaps even more efficiently.

Testing on Quantum Simulators: Before using any actual quantum hardware, the design was validated on a quantum simulator running on a classical computer. Simulators can mimic how qubits would behave (up to a certain number of qubits) without the worry of real-world noise. The quantum transformer was tested on a dataset of 1,600 retinal images, and it attempted to classify each image into the five damage levels. The results were promising: the quantum model achieved about 50–55% accuracy in its classifications. This may sound low, but consider that random guessing would score only 20% on a five-category problem. More importantly, a couple of benchmark classical transformer models (with far more parameters and running on powerful classical hardware) scored in the mid-50% range (around 53–56% accuracy) on the same simplified task. In other words, the tiny quantum model – running in simulation – was matching the performance of the classical approach, despite using a fraction of the computing resources. This was a strong proof-of-concept that quantum neural networks with attention can work, at least for small-scale problems, and that their performance can be on par with classical neural networks of modest size.
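A quick calculation helps put “matching the performance” in perspective. The sketch below computes a normal-approximation (Wald) 95% interval for an accuracy estimate. The evaluation-set size is an assumption: the text gives 1,600 images for the whole dataset, and a held-out test split would be smaller, which makes the interval wider.

```python
import math


def accuracy_interval(accuracy, n_examples, z=1.96):
    """Normal-approximation confidence interval for a classification accuracy."""
    std_err = math.sqrt(accuracy * (1 - accuracy) / n_examples)
    return accuracy - z * std_err, accuracy + z * std_err


chance = 1 / 5  # uniform random guessing over five damage levels
for n in (1600, 400):  # hypothetical evaluation-set sizes
    low, high = accuracy_interval(0.52, n)
    print(f"n={n}: 95% interval {low:.3f} to {high:.3f} (chance = {chance:.2f})")
```

Even if all 1,600 images were scored, the interval around 52% spans roughly ±2.4 percentage points, so quantum scores of 50–55% and classical scores of 53–56% largely fall within each other’s sampling uncertainty, while both sit far above the 20% chance level.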

Moving to Real Qubits: Encouraged by the simulation, the researchers next ran the quantum transformer on a real quantum computer, in this case an early quantum processor provided through cloud access (IBM, for instance, has made small quantum processors available to researchers). Due to hardware limitations, they could only use on the order of 5–6 qubits at a time for the model. Even with this very constrained setup, the quantum transformer still managed to classify images with roughly 45–50% accuracy. This is quite remarkable given the hardware’s limitations and the presence of inevitable noise. It demonstrated that the quantum attention mechanism was physically realizable. However, it also underscored how noise degrades performance: the drop from 50–55% in simulation to 45–50% on hardware was expected as qubit errors crept in. The team attempted to scale the model up to use a few more qubits, hoping to improve accuracy or handle more data, but the current generation of quantum hardware couldn’t support it: the more qubits they used, the harder it became to get reliable results, as errors compounded.
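Why adding qubits made results worse can be seen with a deliberately crude model: if every gate fails independently with probability p, a circuit of G gates runs error-free with probability (1 − p)^G. The circuit shape (one gate per qubit per layer, 20 layers) and the 1% gate error below are hypothetical, and the independence assumption ignores correlated noise, but the exponential decay is the point: widening a circuit adds gates, and every extra gate multiplies in another factor below one.

```python
def error_free_probability(n_qubits, n_layers, gate_error):
    """Probability that no gate fails, assuming one gate per qubit per layer and independent errors."""
    n_gates = n_qubits * n_layers  # hypothetical circuit shape
    return (1 - gate_error) ** n_gates


for qubits in (4, 6, 8, 12):
    p_ok = error_free_probability(qubits, n_layers=20, gate_error=0.01)
    print(f"{qubits:>2} qubits: {p_ok:.3f}")
```

Under these assumptions, going from 4 to 12 qubits cuts the chance of an error-free run from under one half to under one tenth.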

This case study, albeit at a toy scale, suggests that if we can build larger and more stable quantum computers, they might be able to take on tasks like deep-learning classification or sequence modeling in a way comparable to classical computers – potentially using fewer resources or time. The quantum transformer experiment provides a glimpse of what’s possible: it’s the “Hello World” of quantum deep learning. Still, we must be careful to not get ahead of ourselves. There’s a long journey from a 6-qubit prototype to a full-blown quantum AI system running a model as sophisticated as the cutting-edge classical transformers that may exist by the 2020s.

The Roadblocks: Challenges in Achieving Quantum AI at Scale

While early demonstrations give reason for optimism, significant challenges remain before Quantum AI can reach its full potential. Our LupoToro Group analysts caution that it’s important to recognize these hurdles so that we can tackle them one by one:

Scaling Up Qubits: The quantum transformer example used only 5–6 qubits. By contrast, advanced classical AI models (like the large-scale transformers expected to appear in the coming years) might effectively have billions of parameters and would require hundreds or thousands of qubits to run equivalently. Building quantum computers with even a few hundred high-quality qubits is a formidable task. Such machines might emerge within the next decade, but designing a quantum neural network that uses them efficiently is another challenge entirely. As quantum devices grow, they face increased error rates unless error correction is in place, which itself needs many extra qubits. So there is a chicken-and-egg problem: we need more qubits to run big quantum AI models, but we also need even more qubits to correct the errors of those qubits to make them reliable.
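The chicken-and-egg arithmetic can be sketched with a widely quoted rule of thumb for the surface code, a leading error-correction scheme. A distance-d patch in the rotated layout uses d² data qubits plus d² − 1 measurement qubits, and its logical error rate falls roughly as A·(p/p_th)^((d+1)/2) once the physical error rate p is below the threshold p_th. The prefactor A = 0.1, threshold p_th = 1%, physical error rate p = 0.2%, and target logical error rate of 10⁻⁹ are all illustrative assumptions, not measured values.

```python
def logical_error_rate(p, distance, p_th=0.01, prefactor=0.1):
    """Rule-of-thumb surface-code logical error rate (illustrative constants)."""
    return prefactor * (p / p_th) ** ((distance + 1) / 2)


def physical_qubits_per_logical(distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance ** 2 - 1


def smallest_distance(p, target, p_th=0.01):
    """Smallest odd code distance whose estimated logical error rate beats the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold for the code to help")
    d = 3
    while logical_error_rate(p, d, p_th) >= target:
        d += 2
    return d


d = smallest_distance(p=0.002, target=1e-9)
per_logical = physical_qubits_per_logical(d)
print(d, per_logical, 100 * per_logical)  # distance, qubits per logical qubit, total for 100 logical qubits
```

Under these assumptions, each reliable logical qubit costs on the order of a thousand physical qubits, so 100 logical qubits would need roughly 100,000 physical ones, which is the gap the paragraph above describes.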

Error Rates and Decoherence: Today’s quantum hardware is noisy. Qubits can lose their quantum state quickly (within microseconds) and gate operations (the quantum logic operations) are not perfectly accurate. The small quantum AI models can tolerate a bit of noise, but a large-scale algorithm could fail spectacularly if even a few qubits misbehave. Until there are robust error-correction methods or inherently more stable qubit technologies, running a large neural network on a quantum computer will be impractical. Researchers are actively working on quantum error mitigation techniques – for example, running many noisy versions of a circuit and cleverly extrapolating an error-free result – but these techniques can be resource-heavy. A hopeful prospect is to use AI itself to predict and counteract errors (as discussed earlier), essentially having the AI learn the noise pattern and subtract it out. This kind of AI-driven error mitigation could be crucial for scaling Quantum AI.
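The extrapolation idea mentioned above is usually called zero-noise extrapolation: measure the same quantity with the noise deliberately amplified by known factors (for example, by replacing a gate G with G·G†·G, which is logically equivalent but noisier), fit a curve, and read off its value at zero noise. The sketch below replaces the quantum device with a hypothetical noise model in which an ideal expectation value of 1.0 decays exponentially with the noise scale; real devices are messier, and shot noise makes the fit less exact.

```python
import numpy as np


def noisy_expectation(scale, ideal=1.0, decay=0.1):
    """Hypothetical device model: the expectation value decays exponentially as noise is scaled up."""
    return ideal * np.exp(-decay * scale)


def zero_noise_extrapolate(scales, values, degree=2):
    """Fit a polynomial to (noise scale, measured value) pairs and evaluate it at zero noise."""
    coeffs = np.polyfit(scales, values, deg=degree)
    return float(np.polyval(coeffs, 0.0))


scales = np.array([1.0, 2.0, 3.0])  # 1 = the hardware's native noise level
values = noisy_expectation(scales)
estimate = zero_noise_extrapolate(scales, values)
print(f"raw: {values[0]:.4f}, extrapolated: {estimate:.4f}, ideal: 1.0000")
```

The resource cost is visible even here: every extra noise scale means running the whole circuit again, typically thousands of times each to average out measurement noise.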

Classical Competition: Even as quantum hardware improves, classical computing isn’t standing still. Traditional AI is extremely well-funded and has a massive community of researchers and engineers optimizing it. Every year brings faster processors, better algorithms, and larger datasets for classical AI. By the time we have, say, a 1000-qubit quantum computer (which might be many years from now), classical AI might also be far more advanced than it is in 2015. Some experts argue that for many tasks, classical machine learning will remain the preferred tool because it’s mature, efficient, and continuously improving. In fact, one industry expert notes that classical AI is so powerful and so entrenched that it may not be worth completely replacing it with quantum methods in our lifetimes; instead, quantum will likely occupy niche areas or complement classical approaches rather than outright supersede them. We at LupoToro share this pragmatic view: quantum computing is not a panacea, and we won’t know its full value for AI until we try it on real-world problems. Nor should we assume quantum will automatically beat classical approaches in AI; we have to identify the right problems for quantum to solve.

Different Strengths – Structured vs Unstructured Problems: It’s becoming clear that quantum computers and classical AI excel at different kinds of tasks. Modern deep learning (on classical systems) is great at finding statistical patterns in large datasets, such as recognizing images, understanding language, or predicting trends from well-organized databases, because these tasks have regularities that huge neural networks can learn from examples. Quantum computers, on the other hand, show their biggest advantage on problems whose structure is either absent or hidden in a mathematical form that examples cannot reveal. Searching an unsorted database offers no pattern at all to exploit, yet Grover’s algorithm still achieves a quadratic speedup; factoring a large number hides a periodic structure that no known classical algorithm can exploit efficiently, but that Shor’s algorithm extracts with the quantum Fourier transform. If we look to the future, it’s possible that quantum AI will not replace classical AI for tasks like image or speech recognition where classical methods work well. Instead, quantum AI might tackle problems that classical AI struggles with. One scenario could be using qubits to natively encode quantum mechanical systems (like chemical molecules) and “learn” their properties directly, something classical AI can only do indirectly by simulation. In summary, the strengths are complementary: classical AI for pattern recognition in big data, quantum computing for search, hidden-structure problems like factoring, and simulating nature at the quantum level.
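The hidden structure in factoring can be seen in miniature. Shor’s algorithm reduces factoring N to finding the period r of f(x) = aˣ mod N; a quantum computer finds r efficiently, and the rest is classical arithmetic. In the sketch below the period is found by brute force, which is exactly the step that becomes infeasible classically for large N; the choice N = 15, a = 7 is just a textbook-sized example.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force (assumes gcd(a, n) == 1).

    This is the step Shor's algorithm performs efficiently on a quantum computer.
    """
    value, r = a % n, 1
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(n, a):
    """Classical post-processing from Shor's algorithm; returns a nontrivial factor pair or None."""
    if gcd(a, n) != 1:
        return gcd(a, n), n // gcd(a, n)  # lucky guess: a already shares a factor with n
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None  # a^(r/2) = -1 mod n yields only trivial factors: retry
    p = gcd(half - 1, n)
    return p, n // p


print(find_period(7, 15), factor_from_period(15, 7))  # period 4; factors (3, 5)
```

The sequence 7, 4, 13, 1, 7, 4, … repeats with period 4, and that regularity, invisible to pattern-matching on examples, is what the quantum Fourier transform exposes.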

Given these challenges and differences, many researchers (including our team at LupoToro) believe the most powerful approach in the foreseeable future will be hybrid quantum-classical systems. In a hybrid model, you use each type of computer for what it’s best at. A quantum computer could be employed as a specialized subroutine or co-processor within a larger classical AI workflow. For instance, if you had an AI system that needs to evaluate a very complex mathematical function as part of its decision process, a quantum module might handle that portion. Or, a quantum computer might generate synthetic data (say, simulate physics or cryptographic scenarios) that a classical AI can then use to learn from. This way, the quantum and classical parts work in concert rather than in competition.
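The division of labor in a hybrid system can be sketched as a loop: a classical optimizer proposes circuit parameters, the quantum co-processor returns a measured expectation value, and the optimizer updates. In this toy, the “quantum” step is simulated exactly for one qubit rotated by RY(θ), whose Z expectation is cos θ, and gradients come from the parameter-shift rule, which obtains an exact gradient from two extra circuit evaluations. The function names, learning rate, and step count are assumptions; on real hardware each expectation would be estimated from repeated measurements and would be noisy.

```python
import math


def quantum_expectation(theta):
    """Stand-in for a quantum co-processor call: <Z> after RY(theta)|0>, which equals cos(theta)."""
    return math.cos(theta)


def parameter_shift_gradient(f, theta):
    """Exact gradient for a rotation generated by a Pauli operator, from two shifted evaluations."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2


def hybrid_minimize(f, theta0=0.1, learning_rate=0.4, steps=100):
    """Classical gradient descent wrapped around (simulated) quantum evaluations."""
    theta = theta0
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)


theta, energy = hybrid_minimize(quantum_expectation)
print(f"theta = {theta:.4f}, <Z> = {energy:.4f}")  # theta approaches pi, <Z> approaches -1
```

Only the expectation-value function would change if the simulator were swapped for hardware; the classical optimizer around it stays the same, which is what makes this pattern attractive for early quantum co-processors.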

Applications and Dual-Use Implications: From Healthcare to Defense

Why are we so interested in Quantum AI? The potential applications span almost every domain of technology and society. Below we outline a few key areas where the fusion of quantum computing and AI could be transformative, and we also consider the dual-use nature of this technology – how it might be applied for both civilian and military purposes:

Healthcare and Biotech: Quantum AI could vastly improve drug discovery and medical diagnostics. Quantum computers are naturally suited to simulating molecular interactions at the quantum level (which is essential for understanding drug behavior), while AI excels at pattern recognition (for example, identifying diseases from medical images or patient data). Together, a quantum-enhanced AI might sift through huge chemical databases to find promising new pharmaceuticals or analyze complex genomic data to personalize medicine. From the civilian perspective, this means better treatments and diagnostics. However, these same capabilities could have military implications – for instance, rapid development of antidotes to biological agents or enhancement of soldiers’ health and performance through optimized biomolecular interventions.

Finance and Optimization: Many problems in finance, logistics, and supply chain management are essentially optimization problems (finding the best solution among countless possibilities). Classical AI techniques can approximate solutions, but a quantum computer running an optimization algorithm could explore possibilities much faster or find more optimal solutions. Quantum AI might manage global supply chains in real time or optimize investment portfolios with unprecedented accuracy, benefiting economies and businesses. In a defense context, similar optimization could be used for military logistics, scheduling, or strategic planning – ensuring resources and troops are deployed in the most effective way.

Encryption and Cybersecurity: AI is already used in cybersecurity for threat detection, while quantum computing is a double-edged sword in cryptography. On one hand, a sufficiently powerful quantum computer could break certain classical encryptions (like RSA) by factoring large numbers – a clear threat to current military and civilian communications security. On the other hand, quantum physics enables new forms of quantum cryptography (like Quantum Key Distribution) which are theoretically unbreakable by any computer, classical or quantum. Quantum AI could help manage and optimize cryptographic protocols, or even automate cryptanalysis. A government or military equipped with quantum AI might decrypt adversaries’ communications or secure their own to an unparalleled degree. Civilians would benefit from stronger data privacy if quantum-resistant encryption becomes standard, but there is also a risk: if bad actors harness quantum AI, they might bypass current cybersecurity measures. This dual-use aspect means we must consider international cooperation and regulations to prevent a quantum arms race in cyber warfare.
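Quantum Key Distribution is easiest to grasp through BB84, the original protocol proposed by Bennett and Brassard in 1984. The classical simulation below is an illustrative sketch, not a secure implementation (it uses Python’s non-cryptographic `random` module and omits error correction and privacy amplification). It models the key logic: Alice encodes random bits in randomly chosen bases, Bob measures in his own random bases, and they publicly compare bases, keeping only positions where they match. An optional intercept-and-resend eavesdropper shows why tampering is detectable: measuring in the wrong basis randomizes the qubit, producing about a 25% error rate in the sifted key.

```python
import random


def bb84(n_qubits, eavesdrop=False, seed=0):
    """Simulate BB84 sifting; returns (alice_key, bob_key) as lists of bits."""
    rng = random.Random(seed)
    alice_bits = [rng.randint(0, 1) for _ in range(n_qubits)]
    alice_bases = [rng.randint(0, 1) for _ in range(n_qubits)]  # 0 = rectilinear, 1 = diagonal
    bob_bases = [rng.randint(0, 1) for _ in range(n_qubits)]

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        sent_bit, sent_basis = bit, a_basis
        if eavesdrop:
            e_basis = rng.randint(0, 1)
            # Eve's result is random if she picks the wrong basis; she resends in her own basis.
            sent_bit = sent_bit if e_basis == sent_basis else rng.randint(0, 1)
            sent_basis = e_basis
        # Bob reads the bit faithfully only if his basis matches the qubit's current basis.
        bob_bits.append(sent_bit if b_basis == sent_basis else rng.randint(0, 1))

    # Public discussion: keep only positions where Alice's and Bob's bases agree.
    keep = [i for i in range(n_qubits) if alice_bases[i] == bob_bases[i]]
    return [alice_bits[i] for i in keep], [bob_bits[i] for i in keep]


def error_rate(key_a, key_b):
    """Fraction of sifted positions where the two keys disagree."""
    return sum(a != b for a, b in zip(key_a, key_b)) / len(key_a)


alice_key, bob_key = bb84(2000)
print(len(alice_key), error_rate(alice_key, bob_key))  # roughly half the positions survive; 0 errors
eve_a, eve_b = bb84(2000, eavesdrop=True, seed=1)
print(f"with eavesdropper: error rate {error_rate(eve_a, eve_b):.2f}")  # close to 0.25
```

In practice, Alice and Bob sacrifice a random sample of the sifted key to estimate this error rate; a rate well above the channel’s normal noise level signals an eavesdropper.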

Transportation and Smart Infrastructure: AI is already driving the development of autonomous vehicles and smart city infrastructure, which require analyzing vast amounts of sensor and traffic data. Quantum-enhanced AI could improve real-time traffic optimization, enable more efficient routing for airlines and shipping, and enhance the capabilities of autonomous systems by handling the combinatorial explosion of possibilities in path planning. Militaries could use the same technology for coordinating unmanned drones or planning missions with many moving parts. The line between civilian and military in this domain is thin: the same route optimization that helps a delivery company could also optimize a military supply line.

National Security and Defense: Beyond the examples above, a few specific military applications of Quantum AI deserve mention. Advanced AI algorithms could analyze intelligence data (satellite images, signals, etc.) far faster with quantum assistance, possibly identifying threats or patterns that would be missed otherwise. Quantum machine learning might also aid in designing new materials for stealth technology or more resilient communications. Conversely, defenders could use Quantum AI to better detect stealthy incursions or to optimize missile defense systems by rapidly computing interception trajectories. As with many technologies, the military will likely adapt Quantum AI for strategic advantage, while civilian sectors will adapt it for commercial and humanitarian gains. This dual-use nature means ethical considerations and oversight will be important – global stability could be affected by who attains quantum computing capabilities first and how they are used.

Quantum AI’s applications range widely: improved healthcare outcomes, efficient smart cities, stronger cybersecurity, breakthroughs in science and engineering, and yes, advanced defense capabilities. It’s a toolkit that could solve problems previously deemed “impossible” by classical computation. However, with great power comes great responsibility. Whether used in civilian life or by the military, Quantum AI will require guidelines to ensure it is deployed safely and equitably. International collaboration might be necessary to prevent misuse, much as it has been for other dual-use technologies in the past (like nuclear technology or biotechnology).

What’s Next? Outlook for Quantum AI

Standing here in 2015, how far are we from the Quantum AI revolution, and what needs to happen to make it real? Our experts at LupoToro Group outline several key advancements and milestones to watch for in the coming years:

Advances in Quantum Hardware: Everything begins with the hardware. We need quantum computers with more qubits, and more importantly, high-quality qubits that can maintain coherence longer and interact in reliable ways. This includes breakthroughs in qubit technologies (be it superconducting circuits, trapped ions, photonic qubits, or perhaps something not yet invented) and better engineering to control these qubits. A major goal is to achieve a fully error-corrected quantum computer, where logical qubits are stable and computational errors are kept in check by quantum error correction codes. Many in the industry predict that by the end of this decade (around 2020) or shortly after, we might have prototype quantum processors with tens or even hundreds of logical (error-corrected) qubits. Reaching that stage would likely mark a turning point – these machines could run much deeper and more complex quantum algorithms, including those needed for advanced AI applications.

Scalable Quantum Algorithms for AI: On the software side, researchers will need to develop quantum machine learning algorithms that can actually outperform classical ones on real-world tasks. It’s not enough to demonstrate a quantum AI algorithm in principle; it has to show a tangible benefit. This might involve new quantum algorithms for training neural networks faster, or algorithms that use quantum probabilistic sampling to improve AI decision-making. A promising direction is hybrid algorithms (part classical, part quantum) that can scale as hardware scales. The community is already exploring ideas like the Quantum Approximate Optimization Algorithm (QAOA) and other variational algorithms to see if they can aid in machine learning model training or hyperparameter tuning. In the near term, we expect many algorithms to be “quantum-inspired” as well, meaning that even if they run on classical hardware, they leverage ideas from quantum physics to improve performance. Conversely, we’ll see classical techniques guiding quantum circuit designs (for example, using classical neural networks to find good quantum circuit parameters). This cross-pollination will help identify which algorithms truly gain from quantum processing.
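As a flavor of what QAOA does, the sketch below simulates its simplest one-layer (p = 1) form on a tiny MaxCut problem: split the three nodes of a triangle into two groups so that as many edges as possible cross between them. The circuit applies a phase step driven by the cost function, then a mixing step, and a classical grid search tunes the two angles (γ, β), a miniature hybrid quantum-classical loop. The triangle graph, grid resolution, and NumPy state-vector simulation are assumptions for illustration; a real run would execute the circuit on hardware and estimate the expected cut from measurement samples.

```python
import itertools

import numpy as np


def cut_values(n_nodes, edges):
    """Cut size for every bitstring (node i is in group bits[i]), in state-vector order."""
    return np.array(
        [sum(bits[i] != bits[j] for i, j in edges)
         for bits in itertools.product([0, 1], repeat=n_nodes)],
        dtype=float,
    )


def qaoa_expectation(gamma, beta, n_nodes, edges):
    """Expected cut size of the p = 1 QAOA state for angles (gamma, beta)."""
    cuts = cut_values(n_nodes, edges)
    state = np.full(2 ** n_nodes, 1 / np.sqrt(2 ** n_nodes), dtype=complex)  # |+>^n
    state = np.exp(-1j * gamma * cuts) * state  # phase step: exp(-i*gamma*C) is diagonal
    rx = np.array([[np.cos(beta), -1j * np.sin(beta)],
                   [-1j * np.sin(beta), np.cos(beta)]])  # exp(-i*beta*X) on one qubit
    mixer = rx
    for _ in range(n_nodes - 1):
        mixer = np.kron(mixer, rx)
    state = mixer @ state
    return float(np.abs(state) ** 2 @ cuts)


edges = [(0, 1), (1, 2), (0, 2)]  # triangle: the best possible cut is 2 edges
grid = np.linspace(0, np.pi, 50)
best = max((qaoa_expectation(g, b, 3, edges), g, b) for g in grid for b in grid)
print(f"best expected cut {best[0]:.3f} at gamma={best[1]:.2f}, beta={best[2]:.2f} (random guessing: 1.5)")
```

A random split cuts each edge with probability 1/2, so 1.5 is the baseline to beat; the tuned circuit concentrates probability on the six bitstrings that cut two edges. Whether this kind of advantage survives at realistic sizes is exactly the open question the paragraph raises.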

Hybrid Quantum-Classical Infrastructure: The early era of Quantum AI will likely involve cloud-based services where classical and quantum resources work together. For instance, an AI developer might have access to a quantum computing cloud service (much as cloud GPU services exist today) and integrate quantum calls into their machine learning pipeline. To make this seamless, software frameworks and hardware stacks must evolve. We anticipate that robust quantum-classical programming frameworks will emerge, allowing developers to write code that dispatches tasks to quantum or classical processors as appropriate, without needing a PhD in quantum physics. As this hybrid infrastructure matures, more researchers and companies will be able to experiment with Quantum AI, accelerating innovation.
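To make the idea of dispatching concrete, here is a minimal sketch of such a routing layer. Every class in it is hypothetical and does not correspond to any real framework. The "quantum backend" is a local stand-in that computes the exact Pauli-Z expectation, cos(theta), for a single qubit rotated by Ry(theta); a real cloud service would instead queue the job on remote hardware and return measurement statistics.

```python
import math
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class QuantumTask:
    """Hypothetical quantum job: estimate <Z> after applying Ry(theta) to |0>."""
    theta: float


@dataclass
class ClassicalTask:
    """Ordinary work that stays on the local CPU/GPU."""
    fn: Callable[[], float]


Task = Union[QuantumTask, ClassicalTask]


class SimulatedQuantumBackend:
    """Stand-in for a cloud quantum service; computes the exact result locally."""

    def __init__(self) -> None:
        self.jobs_submitted = 0

    def execute(self, task: QuantumTask) -> float:
        self.jobs_submitted += 1
        # For Ry(theta)|0>, the expectation of Pauli-Z is cos(theta).
        return math.cos(task.theta)


class HybridDispatcher:
    """Routes each task to the quantum backend or runs it classically."""

    def __init__(self, quantum_backend: SimulatedQuantumBackend) -> None:
        self.quantum = quantum_backend

    def run(self, task: Task) -> float:
        if isinstance(task, QuantumTask):
            return self.quantum.execute(task)
        return task.fn()


if __name__ == "__main__":
    backend = SimulatedQuantumBackend()
    dispatcher = HybridDispatcher(backend)
    energy = dispatcher.run(QuantumTask(theta=math.pi))            # quantum side
    scaled = dispatcher.run(ClassicalTask(fn=lambda: 2 * energy))  # classical side
    print(energy, scaled, backend.jobs_submitted)
```

Routing on the task's type keeps application code unchanged whether the quantum side is a simulator or a remote device: swapping in another backend only requires an object with an `execute` method.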

Commercialization and Industry Adoption: By the early 2020s, if the above technical progress occurs, we expect to see the first commercial Quantum AI applications. These might be in industries that value cutting-edge optimization and simulation, such as finance (for complex risk modeling), pharmaceuticals (for molecular discovery), and high-tech manufacturing (for materials design). Governments and defense organizations will also be among early adopters, given the strategic advantages discussed. The market for Quantum AI will grow as success stories emerge, which in turn will draw more investment into the field. Some analysts forecast that within a decade, a significant portion of quantum computing usage (and revenue) will come from AI-related tasks. While specific numbers are hard to pin down in 2015, the trajectory seems clear: AI will be one of the killer applications for quantum technology.

Ethical and Societal Considerations: It’s crucial to address the broader impact of Quantum AI before it becomes ubiquitous. On the privacy and security front, large-scale quantum computers running Shor’s algorithm could break widely used public-key encryption such as RSA, and quantum-boosted AI could extract sensitive information from data in new ways, so regulations and encryption standards will need updating to protect sensitive information. In terms of the job market, like any automation technology, Quantum AI could disrupt industries, potentially enhancing productivity but also displacing certain job roles. Society will need to adapt by evolving education and job training programs to prepare the workforce for more advanced analytical and technical roles. There’s also a global aspect: if Quantum AI capabilities are unevenly distributed between countries (or between big companies and everyone else), it could widen technological gaps. International dialogue and perhaps treaties may be needed to manage how Quantum AI is developed and shared, analogous to how we’ve approached other dual-use technologies. Finally, we must ensure ethical AI practices carry over into the quantum era: issues like algorithmic bias, transparency, and control over autonomous systems will remain important, if not more so, when AI gets a quantum upgrade.

Despite the challenges, the momentum behind combining AI and quantum computing is growing each year. We’re already seeing the first milestones: rudimentary quantum machine learning experiments, cross-disciplinary research teams forming, and increased investment at this nexus of fields. Larger organizations and governments are setting up Quantum AI research programs, signaling that this is more than just a theoretical dalliance – it’s viewed as a strategic frontier.

Looking ahead from 2015, LupoToro Group’s technology forecasters expect that the first significant breakthroughs in Quantum AI will begin to emerge by the end of this decade (the late 2010s) and into the early 2020s. This timing aligns with when we might have the initial generations of error-corrected or at least substantially improved quantum hardware. As quantum computers move beyond the noisy, small-scale prototypes of today into more stable machines with tens to hundreds of high-fidelity qubits, we will transition out of the purely experimental phase. This will unlock practical – and possibly unexpected – advantages for AI applications. Just as AI research experienced an explosion in real-world impact once high-performance classical computing became widely available (for example, the jump in capabilities once GPUs could be harnessed for neural networks), we anticipate a similar inflection point for Quantum AI once scalable, fault-tolerant quantum machines become a reality.

Conclusion

The fusion of quantum computing and artificial intelligence stands as one of the most exciting and promising frontiers in technology. If we, writing from the vantage point of 2015, project into the near future, we see a world where AI algorithms not only learn from data but do so on quantum processors that dramatically accelerate their learning and extend their reach. We also envision quantum computers that are made more practical and powerful by incorporating AI at every level, from automated tuning of qubits to intelligent error correction. This symbiosis – Quantum + AI – could solve problems that have long stumped classical computers, making previously impossible tasks achievable.

The impacts will be felt across industries and society. Imagine quantum-enhanced AI systems helping scientists discover new materials to combat climate change, doctors diagnose diseases earlier, financial analysts stabilize markets, and, yes, national security agencies keep nations safe (while also challenging us to safeguard this technology from misuse). The dual-use nature of Quantum AI means we must be conscientious in its development, ensuring that its benefits are broadly shared and its risks managed with care.

Quantum AI is not science fiction any longer; it’s a fast-approaching reality driven by relentless innovation. There is much work to be done – hardware to build, algorithms to invent, and ethical frameworks to establish. But step by step, aided by brilliant minds and passionate teams around the world, we are getting closer to a new era of computation. At LupoToro Group, our researchers and analysts are proud to contribute to and support this journey. We believe that the coming quantum-enhanced intelligent systems will not only revolutionize computing but also help us address some of the greatest challenges of our time. The next decade will be crucial in determining how and how soon this revolution unfolds – and we’ll be watching (and participating) with great anticipation.

In the words of one of our quantum research leads: “The pieces are not yet fully assembled, but the direction is becoming clearer. Quantum AI has the potential to redefine what computers can do – and as we unlock that potential, we may well redefine what we as humans can achieve. The future of computing is unfolding, and it is part classical, part quantum, and far more intelligent than ever before. Let’s get ready for it.”